One measure of voting district "compactness," the extent to which a district's boundaries are regularly shaped and its territory holds together rather than sprawling, is the Polsby-Popper measure. It is the ratio of the district's area to the area of a circle whose circumference equals the district's perimeter, which works out to 4πA/P², where A is the district's area and P its perimeter. A circle scores exactly 1 and every other shape scores less, so districts with contorted, convoluted boundaries produce a low score on this measure, while those with smooth, regular boundaries produce a higher one. Whether districts avoid dividing existing political boundaries, namely counties, is another measure; it gives an idea of the extent to which districts split existing communities. A direct way to quantify this is to count the counties divided by Congressional Districts and, further, to identify the districts that divide the greatest number of counties.
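Both quantities can be computed with a few lines of code. The sketch below is a minimal illustration, not any official scoring tool: it assumes a district is a single simple polygon given as planar (x, y) vertices (real district shapefiles use projected coordinates and often contain multiple parts), and it assumes district assignments arrive as hypothetical (county, district) pairs, one per small geographic unit such as a census block. The function names and the county names in the example are made up for illustration.

```python
import math
from collections import defaultdict


def polygon_area(vertices):
    """Shoelace formula: area of a simple (non-self-intersecting) polygon.

    `vertices` is a list of (x, y) tuples in order around the boundary.
    """
    n = len(vertices)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def polygon_perimeter(vertices):
    """Sum of the edge lengths, including the closing edge."""
    n = len(vertices)
    return sum(math.dist(vertices[i], vertices[(i + 1) % n]) for i in range(n))


def polsby_popper(vertices):
    """Polsby-Popper score = A / (area of a circle with circumference P).

    A circle with circumference P has radius P / (2*pi) and area P**2 / (4*pi),
    so the ratio simplifies to 4*pi*A / P**2: 1.0 for a circle, near 0 for
    long or contorted shapes.
    """
    area = polygon_area(vertices)
    perimeter = polygon_perimeter(vertices)
    return 4 * math.pi * area / perimeter ** 2


def county_split_summary(assignments):
    """Count split counties and how many split counties each district touches.

    `assignments` is a hypothetical input format: an iterable of
    (county, district) pairs, one per small geographic unit.
    """
    districts_per_county = defaultdict(set)
    for county, district in assignments:
        districts_per_county[county].add(district)

    # A county is split if its units are assigned to more than one district.
    split_counties = {c for c, ds in districts_per_county.items() if len(ds) > 1}

    # For each district, count the split counties it takes a piece of.
    splits_by_district = defaultdict(int)
    for county in split_counties:
        for district in districts_per_county[county]:
            splits_by_district[district] += 1

    return len(split_counties), dict(splits_by_district)


if __name__ == "__main__":
    square = [(0, 0), (1, 0), (1, 1), (0, 1)]
    thin_strip = [(0, 0), (10, 0), (10, 0.1), (0, 0.1)]  # same area as the square
    print(round(polsby_popper(square), 3))      # 0.785 (pi / 4)
    print(round(polsby_popper(thin_strip), 3))  # 0.031

    # Made-up counties and district numbers, purely for illustration.
    units = [("Adams", 1), ("Adams", 2), ("Brown", 2),
             ("Clark", 2), ("Clark", 3), ("Dane", 3)]
    n_split, per_district = county_split_summary(units)
    print(n_split)                                   # 2 (Adams and Clark)
    print(max(per_district, key=per_district.get))   # 2 (it divides both)
```

The square and the thin strip enclose the same area, yet the strip's much longer perimeter drops its score from about 0.785 to about 0.031, which is exactly how the measure penalizes stretched or contorted districts. In practice, a geometry library such as Shapely can supply the area and perimeter (its `Polygon.area` and `Polygon.length` attributes) instead of the hand-rolled helpers used here.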

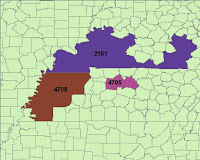

The images above show two different kinds of oddly shaped, non-contiguous districts: those on the left result from the natural shape of the areas in question, while those on the right are the product of gerrymandering. As one might expect, the districts on the right scored very low on the Polsby-Popper measure. Haphazardly drawing and redrawing these boundaries changes the makeup of the population each district contains and, as mentioned, can greatly influence election results. And if one party does it, the other must follow suit, and the cycle continues, seemingly ad infinitum, as is apparently the nature of American democracy today.